Breaking Down Kubernetes Interviews – One Pod at a Time!

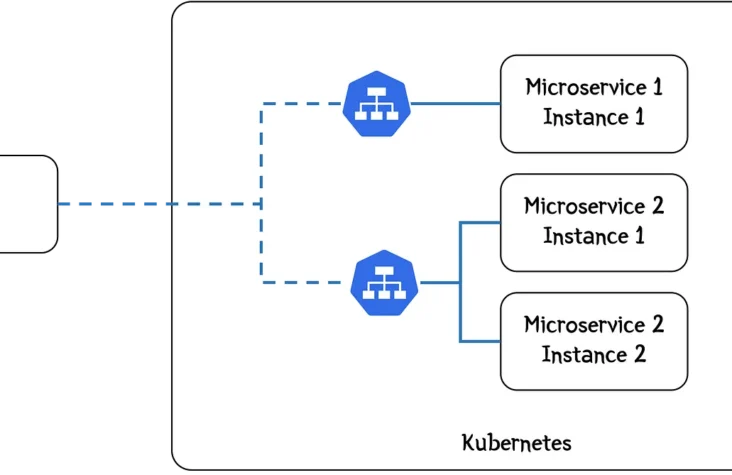

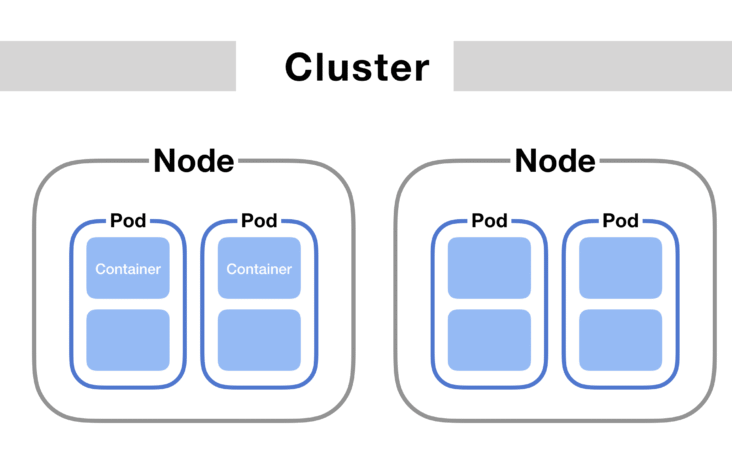

Introduction: Why Container Orchestration?

Problem Statement: As microservices-based applications scale, managing containers across multiple environments manually becomes inefficient and error-prone.

Solution: Container orchestration automates the deployment, scaling, networking, and lifecycle management of containers.

Key Benefits of Kubernetes …