Managing Containers Without Kubernetes: A Glimpse Into Challenges and Solutions

Imagine a world without Kubernetes. A world where containers, those lightweight, portable units of software, existed, but their management was a daunting task. Let’s delve into the challenges that developers and operations teams faced before the advent of this powerful tool.

A World Without Kubernetes

Before Kubernetes, managing containers was a complex and error-prone process. Here are some of the key challenges:

Alternatives Without Kubernetes

A Real-World Scenario: A Microservices Architecture

Consider a typical microservices architecture, where a complex application is broken down into smaller, independent services. Each service is deployed in its own container, offering flexibility and scalability.

Without Kubernetes:

Kubernetes to the Rescue

Kubernetes revolutionized container orchestration by automating many of these tasks. It provides a robust platform for deploying, managing, and scaling containerized applications.

The Challenges of Managing Containers Without Kubernetes

The absence of Kubernetes would force us to rely on fragmented tools and custom solutions, each addressing a piece of the orchestration puzzle. The challenges of scaling, monitoring, and ensuring reliability would increase operational complexity, delay deployments, and impact developer productivity. Thankfully, Kubernetes exists, empowering us to focus on building applications rather than worrying about infrastructure.
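To make "automating many of these tasks" a little more concrete, here is a minimal sketch of the desired-state idea that orchestrators such as Kubernetes are built around: you declare how many replicas of each service you want, and a loop compares that with what is actually running. This is a toy illustration only. The `Cluster` class, the `reconcile` function, and the service names are hypothetical and are not part of any Kubernetes API.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a set of hosts running containers (hypothetical)."""
    running: dict = field(default_factory=dict)  # service name -> replica count


def reconcile(desired: dict, cluster: Cluster) -> list:
    """Compare desired and actual replica counts and return the actions taken."""
    actions = []
    for service, want in desired.items():
        have = cluster.running.get(service, 0)
        if have < want:
            actions.append(f"start {want - have} x {service}")
        elif have > want:
            actions.append(f"stop {have - want} x {service}")
        cluster.running[service] = want
    # Anything still running that is no longer desired gets removed.
    for service in list(cluster.running):
        if service not in desired:
            actions.append(f"stop {cluster.running[service]} x {service}")
            del cluster.running[service]
    return actions


desired_state = {"cart": 3, "payments": 2}
cluster = Cluster(running={"cart": 1, "payments": 2, "legacy": 1})
print(reconcile(desired_state, cluster))  # first pass: fixes the drift
print(reconcile(desired_state, cluster))  # second pass: nothing left to do
```

Without an orchestrator, each of those "start" and "stop" actions is something a script or a person has to notice and carry out by hand.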

AWS S3: All You Need to Know About S3

AWS S3 is crucial for storage as it offers scalable, durable, and secure object storage. It provides benefits like unlimited storage capacity and high availability, enabling easy access to data from anywhere, anytime. To know more about S3 and how the S3 Lifecycle works, watch this tutorial. Subscribe to my channel for more such videos.

AWS S3 (Simple Storage Service) is a powerful cloud storage solution that provides highly scalable, durable, and secure object storage. It offers benefits such as unlimited storage capacity, cost-effective pricing models, and high availability. With S3, you can easily store and retrieve any amount of data at any time, from anywhere on the web.

One of the key features of AWS S3 is the S3 Lifecycle, which allows you to manage your objects so that they are stored cost-effectively throughout their lifecycle. This feature enables automated transitions between different storage classes based on defined rules, helping you optimize costs while ensuring that your data is always available when needed.

Key Benefits of Amazon S3:

S3 Lifecycle Policies: Managing Data Cost-Effectively

One of the powerful features of S3 is its lifecycle management capabilities. S3 Lifecycle Policies enable you to define rules to automatically transition objects between different storage classes or to delete them after a specified period. This is particularly useful for managing storage costs while maintaining the availability and durability of your data.

How S3 Lifecycle Works:

To dive deeper into the workings of AWS S3 and the S3 Lifecycle management, watch this detailed tutorial. If you’re interested in cloud computing, don’t forget to subscribe to my channel for more insightful videos!

Scenario-Based Interview Questions and Answers

1. Scenario: You need to store large amounts of data that is infrequently accessed, but when accessed, it should be available immediately. What S3 storage class would you use?
Answer: For this scenario, the S3 Standard-IA (Infrequent Access) storage class would be ideal. It is designed for data that is accessed less frequently but requires rapid access when needed. It offers lower storage costs compared to the S3 Standard class while maintaining high availability and durability.

2. Scenario: You are working on a project where cost optimization is crucial. You want to automatically move older data to a less expensive storage class as it ages. How would you achieve this?
Answer: You can achieve this by configuring an S3 Lifecycle Policy. This policy allows you to define rules that automatically transition objects to different storage classes based on their age or other criteria. For example, you can set a rule to move objects from S3 Standard to S3 Standard-IA after 30 days, and then to S3 Glacier after 90 days for further cost savings.

3. Scenario: A critical file stored in S3 is accidentally deleted by a team member. How can you ensure that files can be recovered if deleted in the future?
Answer: To protect against accidental deletions, you can enable S3 Versioning on the bucket. Versioning maintains multiple versions of an object, so if an object is deleted, the previous version is still available and can be restored. Additionally, enabling MFA Delete adds an extra layer of security, requiring multi-factor authentication for deletion operations.

4. Scenario: You are dealing with sensitive data that needs to be encrypted at rest and in transit. What options does S3 provide for encryption?
Answer: AWS S3 offers several options for encrypting data at rest: server-side encryption with S3-managed keys (SSE-S3), with AWS KMS keys (SSE-KMS), or with customer-provided keys (SSE-C), as well as client-side encryption before upload. Additionally, S3 supports encryption in transit via SSL/TLS to protect data as it travels to and from S3.

5. Scenario: You are managing a large dataset of user-generated content on S3. This content is frequently accessed for the first 30 days but becomes less relevant over time. How would you optimize storage costs using S3 lifecycle policies?
Answer: To optimize storage costs, I would implement an S3 Lifecycle Policy that transitions objects from the S3 Standard storage class to S3 Standard-IA (Infrequent Access) after 30 days, as these objects will be less frequently accessed but still need to be available quickly. After 90 days, I would transition the objects to S3 Glacier for long-term archival storage. If the content is no longer needed after a certain period, I could also set an expiration rule to delete the objects after, say, 365 days.

6. Scenario: Your team accidentally uploaded sensitive data to an S3 bucket that should have been encrypted but was not. What steps would you take to secure the data?
Answer: First, I would identify and isolate the sensitive data by restricting access to the S3 bucket using an S3 bucket policy or IAM policy. Then, I would use S3’s server-side encryption (SSE) to encrypt the data at rest. If the data needs to remain accessible, I would copy the unencrypted objects to a new bucket with encryption enabled, and then delete the original unencrypted objects. I would also set a bucket policy that enforces encryption for all future uploads to ensure compliance.

7. Scenario: You have a large number of small files in an S3 bucket, and you notice that your S3 costs are higher than expected. What could be causing this, and how would you address it?
Answer: The increased costs could be due to the high number of PUT and GET requests, as S3 charges for both storage and requests. To reduce costs, I would consider aggregating small files into larger objects to reduce the number of requests. Additionally, I would evaluate whether S3 Intelligent-Tiering is appropriate, as it automatically moves objects between access tiers when access patterns change, which could further optimize costs for data with mixed access patterns. Note that objects smaller than 128 KB are not eligible for automatic tiering, so this helps mainly once the small files have been aggregated.

8. Scenario: Your company needs to ensure that critical log files stored in S3 are retained for compliance purposes for at least 7 years.
However, they are not accessed frequently. What would be your approach?
Answer: I would store the log files in the S3 Glacier storage class, which is designed for long-term archival and offers a lower cost for data that is rarely accessed. To comply with the 7-year retention requirement, I would create an
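
The lifecycle rule described in the answers to scenarios 2 and 5 (S3 Standard to Standard-IA after 30 days, to S3 Glacier after 90 days, delete after 365 days) can be sketched as a configuration dictionary in the shape that boto3's `put_bucket_lifecycle_configuration` accepts. Treat this as a sketch under assumptions: the rule ID, the empty prefix, and the helper function names are made up for illustration, and the `apply_lifecycle` step needs boto3 plus valid AWS credentials, so it is defined but not called here.

```python
def build_lifecycle_configuration(prefix: str = "") -> dict:
    """Build a lifecycle configuration in the shape boto3 expects."""
    return {
        "Rules": [
            {
                "ID": "age-out-user-content",  # hypothetical rule name
                "Filter": {"Prefix": prefix},  # empty prefix = whole bucket
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": 365},
            }
        ]
    }


def apply_lifecycle(bucket: str, config: dict) -> None:
    """Apply the configuration to a bucket (requires boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


config = build_lifecycle_configuration()
for transition in config["Rules"][0]["Transitions"]:
    print(transition["Days"], transition["StorageClass"])
```

Keeping the rule as plain data makes it easy to review in a pull request before anyone applies it to a real bucket.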

Do You Know How Auto Scaling Works in AWS?

Auto Scaling in AWS is a hot topic, and it’s worth understanding how autoscaling happens and how the load balancer works. Have you ever wondered how Netflix, Hotstar, and Amazon handle their load during peak hours? They have highly scalable architectures, with load balancers and multiple components that together help them handle the load. In this video, I talk about the Network Load Balancer. Subscribe, share, and like.

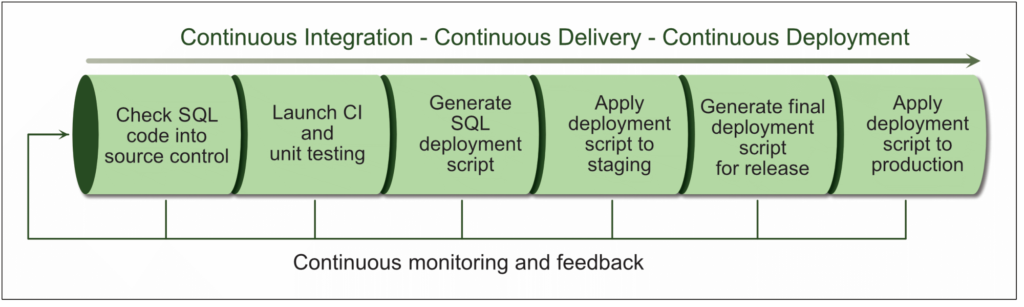

Introduction to Database DevOps

Many companies use automated processes (like pipelines) to manage their software code, deploy it, test it, and set up their computer systems. However, when it comes to working with databases (which store important data), they often don’t use these same automated methods. Instead, they handle databases in a separate way, and this causes a lot of problems. It’s now time to start using automation for databases too.

What is Database DevOps?

Database DevOps is a method that helps speed up and improve the way software is created and released. It focuses on making it easier for developers and operations teams to work together. When you want to create reliable products, it’s essential to make sure that databases and software work well together. With DevOps, you can build and launch both the software and the database using the same setup.

We use DevOps techniques to handle database tasks. We make changes based on feedback from the stages where we deliver and develop applications. This helps ensure a smooth delivery process.

Database DevOps Features:

Database DevOps products typically have the following features:

The Database Bottleneck (Source: Liquibase)

A 2019 State of Database Deployments in Application Delivery report found that for the second year in a row, database deployments are a bottleneck. 92% of respondents reported difficulty in accelerating database deployments. Since database changes follow a manual process, requests for database code reviews are often the last thing holding up a release. Developers understandably get frustrated because the code they wrote a few weeks ago is still in review. The whole database change process is just a blocker.

Now, teams no longer have to wait for DBAs to review the changes until the final phase. It’s not only possible but necessary to do this earlier in the process and package all code together.

Top Database DevOps Challenges

Database DevOps, while incredibly beneficial, comes with its fair share of challenges.
Some of the top challenges in implementing Database DevOps include:

Successfully addressing these challenges involves a combination of technology, processes, and a cultural shift toward collaboration and automation between development and operations teams.

How can DevOps help in solving the above challenges?

DevOps practices can help address many of the challenges associated with Database DevOps by promoting collaboration, automation, and a systematic approach to managing database changes. Here’s how DevOps can assist in solving the problems mentioned:

By combining DevOps practices with these tools and examples, organizations can enhance their Database DevOps capabilities, streamline database management, and achieve more efficient, secure, and reliable database operations.

Top Database DevOps Tools

Open-Source Database DevOps Tools:

Paid Database DevOps Tools:

These tools cater to different database systems, such as MySQL, PostgreSQL, Oracle, SQL Server, and more. The choice of tool depends on your specific database technology, project requirements, and budget. It’s essential to evaluate each tool’s features, compatibility, and community/support when selecting the right one for your Database DevOps needs.
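
One common way to get "a systematic approach to managing database changes" is versioned migrations: every schema change is an ordered, named script, and the database itself records which ones have already run, so a pipeline can apply the same changes safely to every environment. Below is a minimal sketch of that idea using Python's built-in `sqlite3` module. It is an illustration of the general technique, not how any particular tool implements it; the `schema_migrations` table, the `migrate` function, and the two example changesets are assumptions made for this example.

```python
import sqlite3

# Hypothetical ordered changesets; real tools usually read these from
# files kept in version control next to the application code.
MIGRATIONS = [
    ("001_create_users", "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)"),
    ("002_add_email", "ALTER TABLE users ADD COLUMN email TEXT"),
]


def migrate(conn: sqlite3.Connection, migrations=MIGRATIONS) -> list:
    """Apply migrations that are not yet recorded; return the IDs applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_migrations (id TEXT PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT id FROM schema_migrations")}
    newly_applied = []
    for migration_id, sql in migrations:
        if migration_id in applied:
            continue  # already run against this database, skip it
        conn.execute(sql)
        conn.execute(
            "INSERT INTO schema_migrations (id) VALUES (?)", (migration_id,)
        )
        conn.commit()
        newly_applied.append(migration_id)
    return newly_applied


conn = sqlite3.connect(":memory:")
print(migrate(conn))  # first run applies both changesets
print(migrate(conn))  # second run finds nothing new to apply
```

Because the second run is a no-op, the same command can be part of every deployment, which is what lets database changes travel through the pipeline together with the application code.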